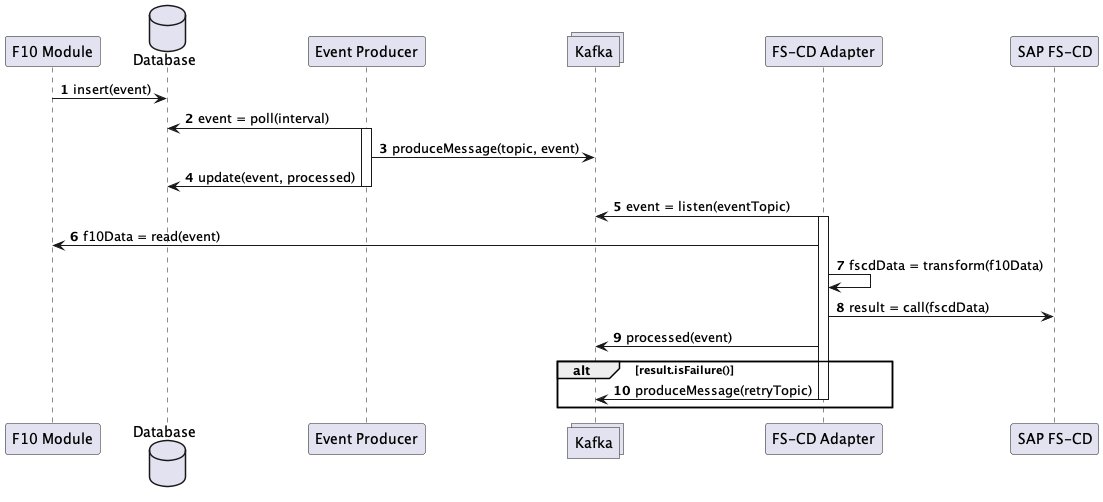

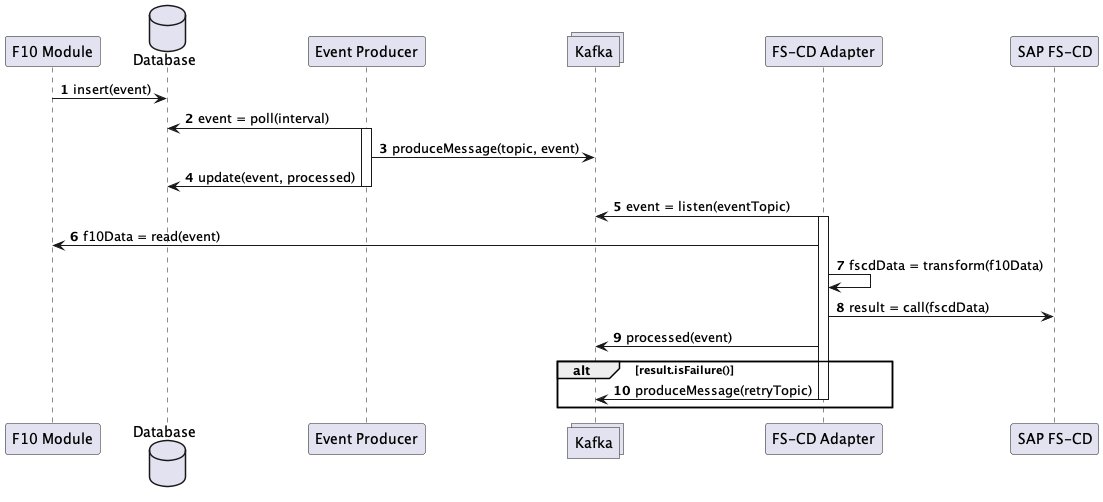

Overview

Failure Scenarios and Resolution

Consistency by Step Failure

- Faktor Zehn Module: A failure of step 1 does not lead to inconsistencies, because the atomicity of the database transaction ensures that the business data and the event are committed together or not at all.

- Kafka Publisher: Failures of steps 2 or 3 do not change the system’s state and can be retried. A failure of step 4 leads to duplicate messages, because the event is reprocessed with the next poll. Because the consumer is idempotent, these duplicates do not lead to inconsistencies.

- Kafka: Kafka failures surface as failures of steps 3, 5, 9, or 10.

- FS-CD Adapter: Failures of steps 5 to 8 do not change the system’s state and can be retried. Failures of steps 9 and 10 lead to duplicate processing but not to inconsistencies, because the consumer is idempotent.
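The two guarantees this list relies on, the atomic write in step 1 and the idempotent consumer, can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module purely for demonstration. The table names (`policy`, `outbox`, `processed_event`, `booking`) and the function names are hypothetical and are not taken from the actual Faktor Zehn or FS-CD Adapter implementation.

```python
import sqlite3

# Hypothetical schemas, for illustration only.
PRODUCER_SCHEMA = """
CREATE TABLE policy (id TEXT PRIMARY KEY, premium REAL NOT NULL);
CREATE TABLE outbox (event_id TEXT PRIMARY KEY, payload TEXT NOT NULL);
"""
CONSUMER_SCHEMA = """
CREATE TABLE processed_event (event_id TEXT PRIMARY KEY);
CREATE TABLE booking (event_id TEXT NOT NULL, payload TEXT NOT NULL);
"""


def save_with_event(conn, policy_id, premium, event_id, payload):
    """Step 1: write business data and the event in ONE transaction."""
    with conn:  # commits on success, rolls back if an exception escapes
        conn.execute(
            "INSERT INTO policy (id, premium) VALUES (?, ?)", (policy_id, premium)
        )
        conn.execute(
            "INSERT INTO outbox (event_id, payload) VALUES (?, ?)", (event_id, payload)
        )


def handle_event(conn, event_id, payload):
    """Idempotent consumer: returns True if applied, False for a duplicate."""
    with conn:
        try:
            # The primary key rejects an event ID that was already processed.
            conn.execute(
                "INSERT INTO processed_event (event_id) VALUES (?)", (event_id,)
            )
        except sqlite3.IntegrityError:
            return False  # duplicate delivery: skip the business effect
        conn.execute(
            "INSERT INTO booking (event_id, payload) VALUES (?, ?)",
            (event_id, payload),
        )
    return True


def count(conn, table):
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


producer = sqlite3.connect(":memory:")
producer.executescript(PRODUCER_SCHEMA)
save_with_event(producer, "P-1", 100.0, "evt-1", '{"policy": "P-1"}')
try:
    # The outbox insert fails (duplicate event ID), so the policy insert
    # made in the same transaction is rolled back as well.
    save_with_event(producer, "P-2", 50.0, "evt-1", '{"policy": "P-2"}')
except sqlite3.IntegrityError:
    pass
policy_rows = count(producer, "policy")
outbox_rows = count(producer, "outbox")

consumer = sqlite3.connect(":memory:")
consumer.executescript(CONSUMER_SCHEMA)
first_delivery = handle_event(consumer, "evt-1", '{"policy": "P-1"}')
redelivery = handle_event(consumer, "evt-1", '{"policy": "P-1"}')
booking_rows = count(consumer, "booking")
```

In this sketch the failed second write leaves neither a policy row nor an event behind, and the redelivered event is recognized by its ID and produces no second booking. Recording the event ID in the same transaction as the business effect is one common way to make a consumer idempotent; the real adapter may use a different mechanism.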

Transient vs Persistent Failures

There are two kinds of failures:

| Kind | Properties | Example | Resolution |
|---|---|---|---|
| Transient | Temporary, non-deterministic, self-healing | Network hiccup, database locks | Can be automatically resolved by reprocessing. |
| Persistent | Permanent, deterministic / reproducible, not self-healing | Software bug, configuration error, malformed payload | Requires manual analysis and resolution before reprocessing. |

Consumer Retry Mechanism

If any of steps 6, 7, or 8 fails, the consumer retries processing with exponential backoff[1]: each retry waits progressively longer than the previous one, which handles transient failures automatically.

If the maximum number of retry attempts is reached without success, processing of the message is considered failed. The message is then published to a dead-letter topic for manual root cause analysis.
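The retry behavior described above can be sketched as follows. This is a simplified, library-independent illustration, not the mechanism referenced in [1]. The function names, the default of four attempts, the initial delay of one second, and the multiplier of two are all assumptions chosen for the example, and the dead-letter topic is represented by a plain list.

```python
import time


def backoff_delays(initial_delay, multiplier, max_attempts):
    """Waiting times between attempts; N attempts have N - 1 waits."""
    return [initial_delay * multiplier**i for i in range(max_attempts - 1)]


def process_with_retry(message, handler, dead_letters, max_attempts=4,
                       initial_delay=1.0, multiplier=2.0, sleep=time.sleep):
    """Call handler(message) until it succeeds or the attempts are used up.

    Returns True on success. After the last failed attempt the message is
    appended to dead_letters (a stand-in for the dead-letter topic) together
    with the error, and False is returned.
    """
    delays = backoff_delays(initial_delay, multiplier, max_attempts)
    for attempt in range(1, max_attempts + 1):
        try:
            handler(message)
            return True
        except Exception as error:
            if attempt == max_attempts:
                dead_letters.append((message, repr(error)))
                return False
            sleep(delays[attempt - 1])  # wait longer after each failure


def make_flaky_handler(failures):
    """Handler that fails `failures` times, then succeeds (transient failure)."""
    remaining = {"count": failures}

    def handler(message):
        if remaining["count"] > 0:
            remaining["count"] -= 1
            raise ConnectionError("temporary network problem")

    return handler


def broken_handler(message):
    """Handler that always fails (persistent failure)."""
    raise ValueError("malformed payload")


# Record the waits instead of actually sleeping, so the demo runs instantly.
waits = []
dead_letters = []

transient_ok = process_with_retry(
    "msg-1", make_flaky_handler(2), dead_letters, sleep=waits.append
)
waits_for_transient = list(waits)

waits.clear()
persistent_ok = process_with_retry(
    "msg-2", broken_handler, dead_letters, sleep=waits.append
)
waits_for_persistent = list(waits)
```

With these assumed settings, the transient failure recovers on the third attempt after waiting 1 and 2 seconds, while the persistent failure exhausts all four attempts (waiting 1, 2, and 4 seconds) and ends up in the dead-letter list. Retrying cannot tell the two kinds of failure apart in advance, which is why the dead-lettered message still needs manual analysis.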

If the root cause analysis finds the failure to be persistent, the root cause must be fixed first. This often requires code or configuration changes before the message can be reprocessed.

If the failure is found to be transient, the message can be reprocessed once the transient issue has been resolved.